How good is your data?

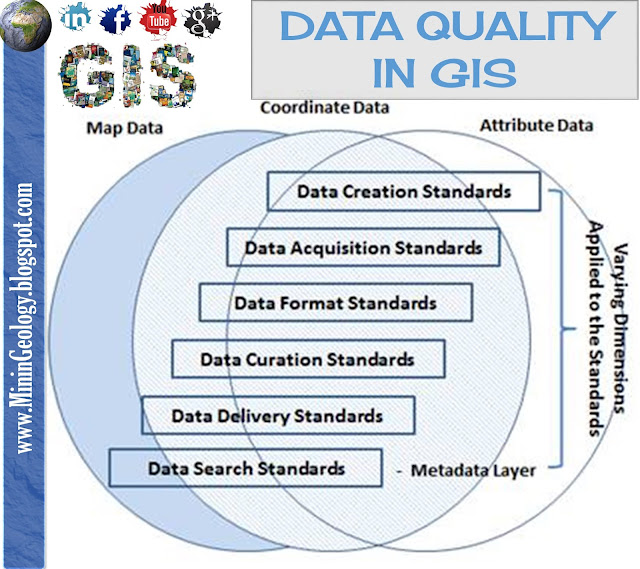

When using a GIS to analyse spatial data, there is sometimes a tendency to assume that all data, both locational and attribute, are completely accurate. This is never the case in reality. Whilst some steps can be taken to reduce the impact of certain types of error, errors can never be completely eliminated. Generally speaking, the greater the degree of error in the data, the less reliable are the results of analyses based upon those data. This is sometimes referred to as GIGO (Garbage In, Garbage Out). There is therefore a need to be aware of the limitations of the data and the implications these may have for subsequent analyses.

Data quality refers to how good the data are. An error is a departure from the correct data value. Data containing a lot of errors are obviously poor in quality.

Data quality parameters

Key components of spatial data quality include positional accuracy (both horizontal and vertical), temporal accuracy (whether the data are up to date), attribute accuracy (e.g. in the labelling of features or in classifications), lineage (the history of the data, including sources), completeness (whether the data set represents all related features of reality), and logical consistency (whether the data are logically structured).

Data Completeness: Completeness refers to the degree to which data are missing - i.e. a complete set of data covers the study area and time period in its entirety. Sample data are by definition incomplete, so the main issue is the extent to which they provide a reliable indication of the complete set of data.
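As a rough illustration of completeness at the attribute level, the sketch below reports the share of records that carry a value for a given field. The record layout and the field name `land_use` are hypothetical, and treating `None` or an absent key as "missing" is an assumption made for this example.

```python
def attribute_completeness(records, field):
    """Share of records (0.0 to 1.0) with a non-missing value for `field`.

    A value counts as missing if the key is absent or the value is None.
    """
    if not records:
        return 0.0
    present = sum(1 for record in records if record.get(field) is not None)
    return present / len(records)


# Hypothetical parcel records; two of the four lack a land-use value.
parcels = [
    {"id": 1, "land_use": "forest"},
    {"id": 2, "land_use": None},
    {"id": 3, "land_use": "urban"},
    {"id": 4},
]

print(attribute_completeness(parcels, "land_use"))  # 0.5
```

A figure like this only measures missing attribute values; it says nothing about features that were never captured in the first place.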

Data Consistency: Data consistency can be defined as the absence of conflicts within a particular database.

Data compatibility: The term compatibility indicates that it is reasonable to use two data sets together. Maps digitised from sources at different scales may be incompatible. For example, although GIS provides the technology for overlaying coverages digitised from maps at 1:10,000 and 1:250,000 scales, this would not be a very useful exercise due to differences in accuracy, precision and generalisation.
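One way to see why the two scales sit poorly together is to convert a map-reading tolerance into ground distance. The sketch below assumes a tolerance of 0.5 mm on the paper map, which is a common rule of thumb rather than a figure from this article; the function name is hypothetical.

```python
def ground_tolerance_m(scale_denominator, map_tolerance_mm=0.5):
    """Ground distance in metres covered by `map_tolerance_mm` on a map
    at scale 1:`scale_denominator`.

    The 0.5 mm default is an assumed rule-of-thumb tolerance.
    """
    return map_tolerance_mm * scale_denominator / 1000.0


for denominator in (10_000, 250_000):
    print(f"1:{denominator}: about {ground_tolerance_m(denominator)} m on the ground")
```

Under that assumption, the same half millimetre corresponds to about 5 m at 1:10,000 and about 125 m at 1:250,000, so an overlay mixes features whose positions are known to very different tolerances.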

To ensure compatibility, data sets should be developed using the same methods of data capture, storage, manipulation and editing (collectively referred to as consistency). Inconsistencies may occur within a data set if the data were digitised by different people or from different sources (e.g. different map sheets, possibly surveyed at different times).

Data Applicability: The term applicability refers to the suitability of a particular data set for a particular purpose. For example, attribute data may become outdated and therefore unsuitable for modelling that particular attribute a few years later, especially if the attribute is likely to have changed in the interim.

Data Precision: Precision refers to the recorded level of detail. A distance recorded as 173.345 metres is more precise than if it is recorded as 173 metres. However, it is quite possible for data to be accurate (within a certain tolerance) without being precise. It is also possible to be precise without being accurate. Indeed, data recorded with a high degree of precision may give a misleading impression of accuracy.
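The difference between precision and accuracy can be made concrete with a small sketch. The "true" distance and both recorded values below are invented for illustration: one value is imprecise but close to the truth, the other is recorded to three decimal places but is further off.

```python
def decimal_places(recorded):
    """Number of digits after the decimal point in a recorded value (a string)."""
    return len(recorded.split(".")[1]) if "." in recorded else 0


def absolute_error(recorded, true_value):
    """Absolute difference between a recorded value and the assumed true value."""
    return abs(float(recorded) - true_value)


TRUE_DISTANCE_M = 173.345  # hypothetical reference value

for recorded in ("173", "175.812"):
    print(
        recorded,
        "decimal places:", decimal_places(recorded),
        "error (m):", round(absolute_error(recorded, TRUE_DISTANCE_M), 3),
    )
```

Here "173" has the lower precision but the smaller error, while "175.812" looks more trustworthy than it is.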

Data Accuracy: Accuracy is the extent to which a measured data value approaches its true value. No dataset is 100 per cent accurate. Accuracy can be quantified using tolerance bands: for example, the distance between two points might be given as 173 metres plus or minus 2 metres. These bands are generally expressed in probabilistic terms (e.g. 173 metres plus or minus 2 metres with 95 per cent confidence).
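A tolerance band of this kind could be estimated from repeated measurements of the same distance. The sketch below is one simple approach, not a prescribed method: it assumes the measurement errors are roughly normally distributed, so that about 95 per cent of individual measurements fall within 1.96 sample standard deviations of the mean. The measurement values are invented.

```python
import statistics


def tolerance_band(measurements, z=1.96):
    """Return (mean, half_width) for an approximate 95% band.

    Assumes roughly normal errors; z=1.96 is the usual two-sided 95% factor.
    """
    mean = statistics.mean(measurements)
    half_width = z * statistics.stdev(measurements)
    return mean, half_width


# Hypothetical repeated measurements of one distance, in metres.
measured_m = [171.9, 173.4, 172.6, 174.1, 173.0]

mean, half_width = tolerance_band(measured_m)
print(f"{mean:.1f} m plus or minus {half_width:.1f} m (approx. 95%)")
```

With only a handful of measurements the band itself is uncertain, so a figure produced this way is best treated as indicative.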

Currency: Currency asks whether the data are up to date. It introduces a time dimension and refers to the extent to which the data have gone past their 'sell by' date. Administrative boundaries tend to exhibit a high degree of geographical inertia, but they are revised from time to time. Other features may change location more frequently: rivers may follow a different channel after flooding; roads may be straightened; the boundaries between different types of vegetation cover may change as a result of deforestation or natural ecological processes; and so forth. The attribute data associated with spatial features will also change with time.
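A currency check can be as simple as comparing the age of a data set with the maximum age that is acceptable for the task at hand. The sketch below assumes each data set records a last-updated date; the five-year threshold and the function names are hypothetical, and a sensible threshold depends on how quickly the features in question change.

```python
from datetime import date


def age_in_days(last_updated, today):
    """Days elapsed between the last update and the reference date."""
    return (today - last_updated).days


def is_current(last_updated, max_age_days, today):
    """True if the data set is no older than `max_age_days` on `today`."""
    return age_in_days(last_updated, today) <= max_age_days


FIVE_YEARS = 5 * 365  # assumed threshold, for illustration only
reference_day = date(2024, 1, 1)

print(is_current(date(2015, 6, 1), FIVE_YEARS, reference_day))  # False
print(is_current(date(2023, 3, 1), FIVE_YEARS, reference_day))  # True
```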

These components play an important role in assessment of data quality for several reasons:

1. Even when source data, such as official topographic maps, have been subject to stringent quality control, errors are introduced when these data are input to GIS.

2. Unlike a conventional map, which is essentially a single product, a GIS database normally contains data from different sources of varying quality.

3. Unlike topographic or cadastral databases, natural resource databases contain data that are inherently uncertain and therefore not suited to conventional quality control procedures.

4. Most GIS analysis operations will themselves introduce errors.

Data Errors

It is possible to make a further distinction between errors in the source data and processing errors resulting from spatial analysis and modelling operations carried out by the system on the base data. The nature of the positional errors that can arise during data collection and compilation, including those occurring during digital data capture, is generally well understood, and a variety of tried and tested techniques is available to describe and evaluate them.

Data quality is especially critical in GIS, where errors in spatial data directly affect the reliability of analyses and outcomes. The saying ‘Garbage in, Garbage out’ highlights the importance of accurate data for effective GIS applications. Using a data governance framework can help address data quality issues by setting standards for consistency, compatibility, and accuracy, ensuring that data meets required thresholds. When integrating generative AI and data quality, having high-quality GIS data becomes even more essential, as AI-based models depend on precise, current, and complete data to deliver meaningful insights. This underscores the value of maintaining rigorous data standards across all GIS projects.

Ensuring data accuracy in GIS is crucial, as errors impact analysis reliability. Using privacy-enhancing technologies and insights from data management books can help mitigate risks and improve data integrity in spatial analysis.
